A Universe is a complete digital environment that connects data, information, and knowledge across diverse domains. It extends beyond IT infrastructure to include all data sources, such as physical assets, business processes, regulatory constraints, data lifecycles, and knowledge flows. A Universe can be understood through different perspectives:

It also encompasses events, transactions, state changes, and lifecycle transitions, thereby enabling reasoning about system evolution. In this sense, a Universe is a living ecosystem where raw data flows into information, and information evolves into knowledge, shaping how people, machines, and structures interact securely and intelligently.

UaC (Universe as Code) is the forward-thinking extension of the well-known IaC (Infrastructure as Code) paradigm. While IaC focuses on describing and automating IT infrastructure such as servers, networks, and software stacks, UaC embraces a much broader and more complex scope. In UaC, the principle of declarative, code-driven definition extends not only to infrastructure but also to physical assets, business processes, regulatory constraints, data lifecycles, and knowledge flows. Just as IaC transformed system administration into programmable workflows, UaC envisions an environment where entire digital-physical ecosystems can be described, evolved, and reasoned about through code. This approach anticipates future needs where interoperability, compliance, intelligence, and evolution of systems are orchestrated holistically, making UaC a natural evolution beyond IaC.


Traditional Infrastructure as Code tools such as Terraform are effective for provisioning and configuration but are not designed for real-time, event-driven orchestration across diverse systems. OpenUniverse fills this gap by enabling triggers, workflows, and responses to be defined as code and executed dynamically across cloud services, on-premise systems, IoT devices, and legacy applications.

The platform ensures that orchestration logic is versioned, signed, and timestamped for compliance and traceability, while maintaining security through cryptographic chaining of records. Because it is system-neutral, it integrates across heterogeneous technologies without vendor lock-in.
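The cryptographic chaining described above can be illustrated with a minimal sketch. It assumes a plain SHA-256 hash chain in which each record stores the hash of its predecessor. The field names (`prev_hash`, `timestamp`, `payload`, `hash`) and the helper functions are hypothetical and are not taken from OpenUniverse's actual record format; digital signatures and trusted timestamping are left out for brevity.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder "previous hash" for the first record


def record_hash(prev_hash, timestamp, payload):
    """Hash the record body together with the previous record's hash."""
    body = json.dumps(
        {"prev_hash": prev_hash, "timestamp": timestamp, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain, timestamp, payload):
    """Append a new record that is linked to the last record in the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    record = {
        "prev_hash": prev_hash,
        "timestamp": timestamp,
        "payload": payload,
        "hash": record_hash(prev_hash, timestamp, payload),
    }
    chain.append(record)
    return record


def verify_chain(chain):
    """Return True only if every link and every stored hash is intact."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev_hash"] != prev_hash:
            return False
        expected = record_hash(
            record["prev_hash"], record["timestamp"], record["payload"]
        )
        if record["hash"] != expected:
            return False
        prev_hash = record["hash"]
    return True
```

Because each hash covers the previous one, changing any earlier record invalidates every record that follows it, which is what makes tampering detectable after the fact.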

By combining real-time coordination with auditability and long-term verifiability, OpenUniverse extends the benefits of IaC into the operational domain, offering a structured and predictable approach to automation that aligns with regulatory and governance needs.

OpenUniverse operates in a world without a single point of control. Unlike traditional systems that rely on centralized servers or orchestration engines, OpenUniverse treats every component — events, jobs, triggers, processors, and systems — as part of a decentralized, self-organizing network.

In OpenUniverse, workflows are not dictated by a central engine. Instead, each component communicates through cross-referenced event streams, allowing jobs and triggers to react dynamically to changes anywhere in the system. This model ensures:

OpenUniverse operates in several stages to transform static document definitions into a dynamic, event-driven infrastructure.

Before any processing begins, OpenUniverse optionally performs a self-check to verify its own integrity:

Every record produced by OpenUniverse is secured and traceable through multiple layers of protection:

The architecture is based on documents as the primary abstraction. Each component (events, jobs, triggers, systems, and processors) is described as a structured document, with the RootDocument serving as the central blueprint. This model makes the environment self-describing, auditable, and easy to evolve.

Execution is fully event-driven. Events from calendars, schedulers, or publishers activate jobs, which process them through defined processors and then act on target systems. Instead of static configuration, relationships between jobs, events, and systems are discovered dynamically at runtime, creating adaptive orchestration flows.

Runs as a lightweight client, not a server — easy to integrate into existing environments without adding infrastructure overhead.

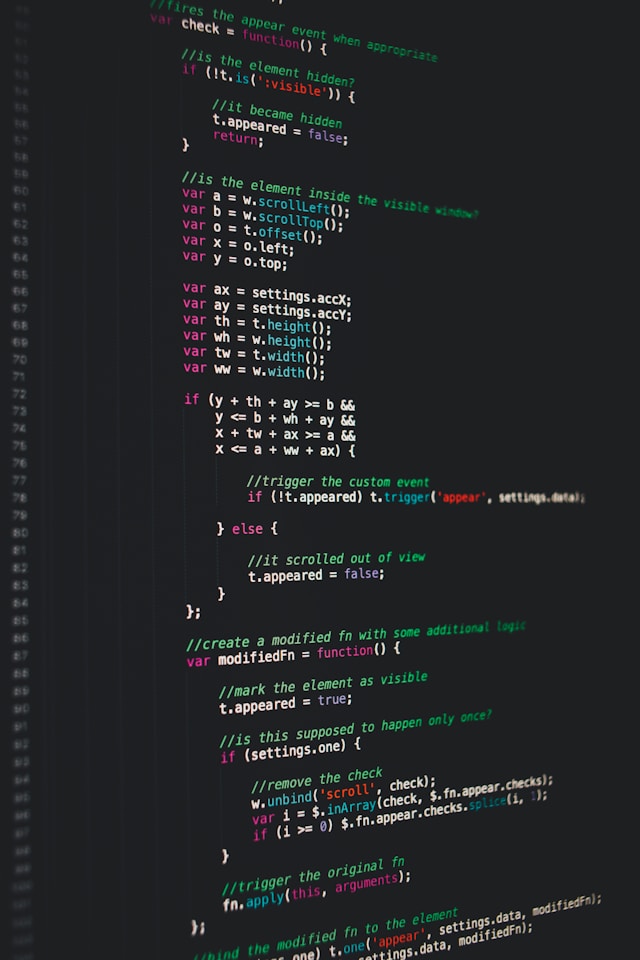

Documents can be authored in JSON, YAML, or JavaScript.

When JSON is saved with a .js extension, JavaScript-style comments are allowed.

In addition, the MD (Markdown) format is supported, which, along with text, can include JSON/YAML documents and plugins.

At startup, OpenUniverse transforms all MD documents into JSON/YAML and extracts plugins from the Markdown.
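As a rough illustration of the Markdown step, the sketch below pulls fenced `json` and `yaml` blocks out of a Markdown string. The convention that embedded documents sit in fenced blocks tagged `json` or `yaml` is an assumption, as are the function names; the actual extraction rules, including how plugins are identified, are not described here. Only JSON blocks are parsed, since YAML parsing would need a third-party library.

```python
import json
import re

TICKS = "`" * 3  # fence marker, built this way to keep the example readable

# Assumed convention: documents live in fenced blocks tagged json or yaml.
FENCE = re.compile(
    r"^" + TICKS + r"(json|yaml)[ \t]*\n(.*?)^" + TICKS + r"[ \t]*$",
    re.DOTALL | re.MULTILINE,
)


def extract_documents(markdown_text):
    """Return (language, body) pairs for every fenced json/yaml block."""
    return [(m.group(1), m.group(2)) for m in FENCE.finditer(markdown_text)]


def parse_json_documents(markdown_text):
    """Parse only the JSON blocks; YAML bodies are left as raw text."""
    return [
        json.loads(body)
        for language, body in extract_documents(markdown_text)
        if language == "json"
    ]
```

Surrounding prose in the Markdown file is ignored by this sketch, so documentation and definitions can share one file.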

Version control is built directly into the platform, ensuring that every change to systems, jobs, events, and relationships is tracked and managed. This makes history, auditing, and rollback an integral part of how the platform operates, not an external add-on.

Components are discovered dynamically based on their tags, attributes, etc., rather than relying on hard-coded lists. Relationships describe how core components interact with each other. They are established through search queries over tags and attributes, allowing systems, jobs, events, and processors to be linked in a flexible and adaptive way.
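A minimal sketch of tag- and attribute-based discovery follows. The component shape (`name`, `tags`, `attributes`) and the `discover` function are hypothetical, chosen only to show how a relationship can be expressed as a query rather than a hard-coded list; the real query syntax is not shown in this document.

```python
def matches(component, required_tags=(), attributes=None):
    """True if the component carries all required tags and attribute values."""
    tags = set(component.get("tags", []))
    if not set(required_tags) <= tags:
        return False
    attrs = component.get("attributes", {})
    return all(attrs.get(key) == value for key, value in (attributes or {}).items())


def discover(components, required_tags=(), attributes=None):
    """Return every component that satisfies the query."""
    return [c for c in components if matches(c, required_tags, attributes)]
```

With this approach, adding a new system that carries the right tags makes it visible to existing jobs without editing those jobs.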

Each document can define pre-run constraints that validate conditions before a processing loop begins. These checks act as safeguards, ensuring that jobs and processes only start when prerequisites are met.
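The idea of pre-run constraints can be sketched as a list of named predicates that must all pass before a loop starts. The names `check_constraints` and `run_if_ready`, and the dictionary-based context, are assumptions made for illustration and are not part of the platform's documented interface.

```python
def check_constraints(constraints, context):
    """Return the names of failed constraints; an empty list means all passed."""
    return [name for name, predicate in constraints if not predicate(context)]


def run_if_ready(constraints, context, loop):
    """Start the processing loop only when every constraint holds."""
    failed = check_constraints(constraints, context)
    if failed:
        return {"started": False, "failed": failed}
    loop(context)
    return {"started": True, "failed": []}
```

Returning the names of the failed checks, rather than a bare boolean, makes it easier to report why a job did not start.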

Employs continuous, parallel, native processes that stream data directly: input is read from stdin, and output is written to stdout. This ensures seamless composition with other tools and pipelines, staying true to the UNIX philosophy.
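A stream processor in this style can be as small as the sketch below. It assumes newline-delimited JSON events with a `level` field, which is an illustrative format rather than one defined by OpenUniverse; the function names are likewise hypothetical.

```python
import json
import sys


def process_stream(lines, out):
    """Read newline-delimited JSON events and forward only error events."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between events
        event = json.loads(line)
        if event.get("level") == "error":
            out.write(json.dumps(event) + "\n")
            out.flush()  # emit each event immediately instead of buffering


def main():
    """Entry point for use in a pipeline: stdin in, stdout out."""
    process_stream(sys.stdin, sys.stdout)
```

Calling `main()` from a script lets the processor sit in an ordinary shell pipeline, and the same function can be tested with in-memory streams.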

The platform is fully independent of programming languages. It can orchestrate jobs, processes, and events regardless of whether systems use Java, Python, JavaScript, C++, or any other language.

Everything is modeled as a system — from hardware such as IoT devices, robots and CNC machines to enterprise databases and NGFWs. This flexible concept makes it possible to orchestrate and observe heterogeneous environments with a single approach.

Jobs and processes can be activated by events, such as calendar and schedule timepoints, or by external signals. Triggers define when and why execution starts.

Event processors transform, filter, route, and respond to events across affected systems.

A job executes a set of processes across one or many systems. Jobs define what to run, where to run it, and which results to collect. They provide a consistent way to express distributed execution.
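The interplay of events, triggers, processors, and jobs can be sketched as follows. The `Job` class, its fields, and the `dispatch` function are hypothetical; matching on shared tags stands in for whatever trigger mechanism the platform actually uses, and the "systems" here are just names that receive a processed payload.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    trigger_tags: set  # event tags that activate this job (assumed mechanism)
    systems: list      # names of the target systems
    results: list = field(default_factory=list)

    def run(self, event, processor):
        """Process the event once, then record the result per target system."""
        payload = processor(event)
        for system in self.systems:
            self.results.append((system, payload))


def dispatch(event, jobs, processor):
    """Run every job whose trigger tags overlap with the event's tags."""
    activated = [job for job in jobs if job.trigger_tags & set(event["tags"])]
    for job in activated:
        job.run(event, processor)
    return [job.name for job in activated]
```

The job states what to run and where, while the event decides when; neither needs to know about the other beyond the shared tags.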

You’re not locked into a single backend tool. Export messages asynchronously to any backend in real-time:

Abstract DMQ ensures that no message is ever lost — automatically capturing and preserving undeliverable (“dead”) messages across the universe for recovery, analysis, and compliance. It can use any backend for storage and management of dead messages.
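The dead-message idea can be shown with a small sketch: when delivery fails, the message and the failure reason are captured instead of being dropped. The `DeadMessageQueue` class and `deliver` function are illustrative names, and the in-memory list is an assumed stand-in for a real storage backend.

```python
class DeadMessageQueue:
    """Keeps undeliverable messages; the list stands in for any backend."""

    def __init__(self, store=None):
        self.store = store if store is not None else []

    def capture(self, message, reason):
        self.store.append({"message": message, "reason": reason})


def deliver(message, send, dmq):
    """Try to send a message; on failure, preserve it in the dead message queue."""
    try:
        send(message)
        return True
    except Exception as exc:  # any delivery failure is treated as "dead"
        dmq.capture(message, str(exc))
        return False
```

Captured messages can later be inspected or replayed, which is what makes the queue useful for recovery and analysis.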

Every record produced by OpenUniverse is protected through multiple layers of security, ensuring authenticity, integrity, and long-term verifiability. These guarantees provide a strong foundation for regulatory compliance, legal auditability, and adherence to industry standards.

The platform is released under the MIT License, one of the most widely adopted open-source licenses. It grants broad freedom to use, modify, and distribute the software with minimal restrictions.

Turn your MVP into a scalable, production-ready reality from day one. OpenUniverse lets you focus on building your core product — while it takes care of integration, orchestration, and automation. Go from prototype to global scale without rewriting your architecture. Embrace a platform that’s flexible, language-agnostic, and built to adapt as your vision grows.

Today’s IT landscape is complex, with diverse protocols, multi-cloud services, and growing use of asynchronous communication. Enterprise Integration Patterns (EIP) offer proven solutions and a shared vocabulary to design reliable, adaptable integrations that handle complexity and failures gracefully.

Works seamlessly across platforms, clouds, and systems. Connect to cloud services, on-prem systems, APIs, message queues, databases, or custom endpoints. The platform speaks your language and adapts to your ecosystem — no need to conform to a rigid stack.

Engineered for real-time execution and scalable throughput. Event flows are processed as they happen. The platform supports asynchronous triggers, backpressure handling, and distributed execution to meet the demands of fast-moving systems.

Empower your engineers to automate safely and consistently. With built-in support for version-controlled workflows, audit trails, policy integration, and modular architecture, teams can build reliable automation without sacrificing control or visibility.

Bring your own tools, scripts, and runtimes. Use any language or executable interface to build event sources, processors, or responders. Whether it's a shell script, Python program, or compiled binary — if it runs, it integrates.

Design your infrastructure like building with blocks — intuitive, modular, and endlessly flexible. Define every component as code, integrate any system at any scale, and use your preferred tools and languages. Build fast, evolve freely, and stay in control.

Every outcome is deterministic, transparent, and cryptographically verifiable. No AI-driven ambiguity—just open logic, signed data, and reproducible processes. Designed for those who demand proof over promises.



The MIT License provides freedom to use, modify, and integrate the software in any project — open source or commercial. With no copyleft, legal barriers, or hidden constraints, it enables building, scaling, and innovation on flexible terms.